Meta’s Multi-Token Prediction — What Does It Mean for AI Models & Future of Coding?

In a recent report, researchers at Meta, Université Paris-Saclay, and Ecole des Ponts ParisTech suggest improving the accuracy and speed of AI large language models (LLMs) by “making them predict multiple tokens simultaneously.”

This departs from the classic design of auto-regressive language models, which are trained to predict one token at a time.

Next-Token Prediction Model: Limitations & Benefits

A “token” is a small unit of text, such as a word, part of a word, or a punctuation mark. Models read prompts and write responses as sequences of tokens.

The current large language models such as GPT and Llama are trained with a next-token prediction loss.

Meaning? The model is given a sequence of tokens and must predict the next one.

How are AI Models Trained?

When the model generates text, it predicts the next token, adds that predicted token to the input, and repeats the process, one token at a time. Training applies the same next-token objective over and over on large corpora of text, so the model learns general patterns that allow it to generate coherent passages of text.
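The predict, append, repeat loop can be illustrated with a toy example. This is a minimal sketch, not how a real LLM works internally: the "model" here is just a table of which word most often follows which, and the names `train_bigram`, `generate`, and the sample corpus are made up for illustration.

```python
from collections import Counter, defaultdict

def train_bigram(tokens):
    """Count which token follows which: a toy stand-in for next-token training."""
    counts = defaultdict(Counter)
    for current, following in zip(tokens, tokens[1:]):
        counts[current][following] += 1
    return counts

def generate(counts, prompt, max_new_tokens):
    """Autoregressive loop: predict one token, append it to the input, repeat."""
    sequence = list(prompt)
    for _ in range(max_new_tokens):
        followers = counts.get(sequence[-1])
        if not followers:
            break  # the toy model has never seen a continuation for this token
        next_token = followers.most_common(1)[0][0]
        sequence.append(next_token)  # the prediction becomes part of the input
    return sequence

# Hypothetical toy corpus, split on whitespace so each word is one token.
corpus = "the cat sat on the mat and the cat sat down".split()
model = train_bigram(corpus)
print(generate(model, ["the"], 2))  # ['the', 'cat', 'sat']
```

Each pass through the loop produces exactly one new token, which is why generation time grows with the length of the output.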

Next-token prediction seems like a reasonable way to generate “logical” responses. However, researchers have observed that it is limited in “acquiring language, world knowledge, and reasoning capabilities.”

What is Multi-Token Prediction?

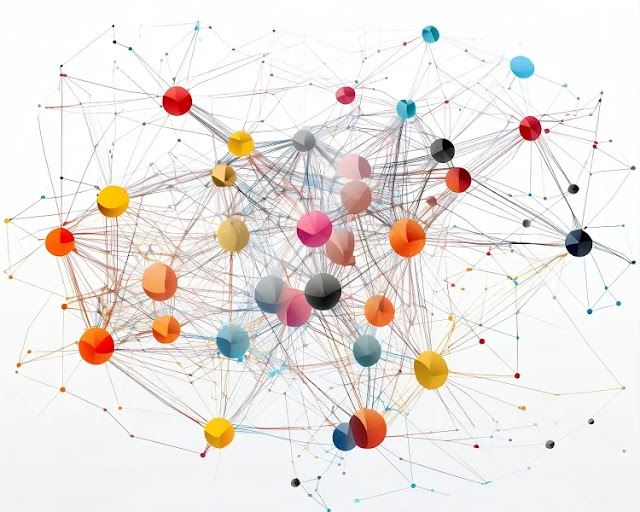

Unlike single-token prediction, where tokens are predicted one at a time, multi-token prediction trains the LLM to predict several future tokens at once from each position in the training corpus.

According to the researchers, multi-token prediction does not require extra training time or memory overhead.
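To make the difference in training targets concrete, here is a small sketch that builds the (context, target) pairs for both setups. The function names and the choice of two future tokens are assumptions for illustration, not details taken from the report.

```python
def next_token_examples(tokens):
    """Classic setup: each context is paired with the single token that follows it."""
    return [(tokens[: i + 1], tokens[i + 1]) for i in range(len(tokens) - 1)]

def multi_token_examples(tokens, n_future):
    """Multi-token setup: each context is paired with the next n_future tokens."""
    examples = []
    for i in range(len(tokens) - n_future):
        context = tokens[: i + 1]
        targets = tokens[i + 1 : i + 1 + n_future]
        examples.append((context, targets))
    return examples

# Hypothetical tokenization of a tiny code snippet.
tokens = ["def", "add", "(", "a", ")"]

for context, target in next_token_examples(tokens):
    print(context, "->", target)

for context, targets in multi_token_examples(tokens, n_future=2):
    print(context, "->", targets)
```

From the same position, the classic setup asks for one answer, while the multi-token setup asks for a short run of upcoming tokens.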

The multi-token prediction language model is based on the Transformer architecture used in most LLMs, with some modifications. It keeps the main structure of the Transformer, but instead of a single output head it has multiple independent output heads, one for each token it wants to predict.
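The idea of one shared body feeding several independent output heads can be sketched in a few lines of plain Python. This is a toy under loud assumptions: the `trunk` function below is just an average of random embeddings standing in for the Transformer, the sizes are arbitrary, and summing the per-head losses is one simple way to combine them rather than a confirmed detail of the researchers' implementation.

```python
import math
import random

random.seed(0)
VOCAB = ["def", "add", "(", "a", ",", "b", ")"]
HIDDEN = 4    # hypothetical hidden size
N_FUTURE = 2  # hypothetical number of future tokens, one output head each

def random_matrix(rows, cols):
    return [[random.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

embeddings = random_matrix(len(VOCAB), HIDDEN)  # shared by every head
heads = [random_matrix(len(VOCAB), HIDDEN) for _ in range(N_FUTURE)]

def trunk(context):
    """Stand-in for the shared Transformer body: mean of the token embeddings."""
    rows = [embeddings[VOCAB.index(tok)] for tok in context]
    return [sum(col) / len(rows) for col in zip(*rows)]

def softmax(logits):
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def predict(context):
    """Run the trunk once, then let each head score the vocabulary independently."""
    hidden = trunk(context)
    return [
        softmax([sum(w * h for w, h in zip(row, hidden)) for row in head])
        for head in heads
    ]

def multi_token_loss(context, targets):
    """Sum of cross-entropy losses, one per head and its target token."""
    distributions = predict(context)
    return sum(-math.log(dist[VOCAB.index(tok)]) for dist, tok in zip(distributions, targets))

probs = predict(["def", "add"])
loss = multi_token_loss(["def", "add"], ["(", "a"])
print(len(probs), "heads; loss =", round(loss, 3))
```

The point of the design is that the expensive shared computation happens once per position, while each extra head adds only a small output layer on top.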

“While cost-free and simple, multi-token prediction is an effective modification to train stronger and faster transformer models,” the researchers write.

Multi-token prediction also makes models up to 3 times faster at inference time across a wide range of batch sizes, according to the researchers.

Future of Coding with Multi-Token LLM

An LLM that can predict multiple tokens at once, without requiring extra training time or memory overhead, could prove to be a valuable resource for coders.

How? Tools built on such models could analyze multiple code elements at once, provide suggestions faster, and help debug code more quickly. Developers could rely on these tools more confidently for repetitive tasks, get quicker solutions, and focus more on complex problems.

AI could also help automate some coding processes, making it easier for developers to write clean and efficient code. It could therefore become a powerful assistant for coders, enhancing productivity and speeding up workflows.
